Welcome to the Prophesee Research Library, where academic innovation meets the world’s most advanced event-based vision technologies.

We have brought together groundbreaking research from scholars who are pushing the boundaries of what is possible with Prophesee event-based vision technologies, to inspire collaboration and drive new breakthroughs in the academic community.

Introducing the Prophesee Research Library, the largest curated collection of academic papers leveraging Prophesee event-based vision.

Together, let’s reveal the invisible and shape the future of Computer Vision.

An Event-Based Opto-Tactile Skin

This paper presents an event-based opto-tactile skin, a neuromorphic tactile sensing system that integrates dual dynamic vision sensors (DVS) with a flexible optical waveguide to enable high-speed, low-latency touch detection over large areas of soft robotic surfaces. The system leverages sparse event streams for press localization, using clustering-based triangulation to achieve accurate tactile mapping with minimal computational overhead. Experimental results demonstrate reliable contact detection and localization across a 100 cm² sensing area, highlighting its potential for real-time, energy-efficient soft robotics applications.
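The summary names two steps: clustering the sparse events from each sensor, then triangulating the press location. The sketch below illustrates that kind of pipeline under simplifying assumptions that are ours, not the paper's. Each DVS is assumed to report only event column indices, the largest 1D cluster of columns is assumed to mark the press, a column is assumed to map linearly to a bearing angle across the sensor's field of view, and both sensor poses in the skin plane are assumed known. The helper names (`largest_cluster_centroid`, `column_to_bearing`, `triangulate`) are hypothetical.

```python
import math


def largest_cluster_centroid(columns, gap=3):
    """Split sorted event columns wherever consecutive values differ by more
    than `gap` pixels, then return the centroid of the largest cluster."""
    if not columns:
        raise ValueError("no events")
    xs = sorted(columns)
    clusters = [[xs[0]]]
    for x in xs[1:]:
        if x - clusters[-1][-1] > gap:
            clusters.append([x])  # gap too large: start a new cluster
        else:
            clusters[-1].append(x)
    best = max(clusters, key=len)
    return sum(best) / len(best)


def column_to_bearing(column, width, fov_deg, axis_deg):
    """Hypothetical linear camera model: column 0 looks along axis - fov/2 and
    column width-1 along axis + fov/2. Returns a world-frame angle in radians."""
    offset = (column / (width - 1) - 0.5) * fov_deg
    return math.radians(axis_deg + offset)


def triangulate(p1, theta1, p2, theta2):
    """Intersect the rays p1 + t1*d1 and p2 + t2*d2, where d = (cos, sin)."""
    d1 = (math.cos(theta1), math.sin(theta1))
    d2 = (math.cos(theta2), math.sin(theta2))
    denom = d1[0] * d2[1] - d1[1] * d2[0]  # 2D cross product d1 x d2
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel")
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])


if __name__ == "__main__":
    # Hypothetical setup: sensors at (0, 0) and (10, 0) cm with optical axes
    # at 45 and 135 degrees, 640-pixel-wide sensors with a 90-degree field of view.
    c1 = largest_cluster_centroid([318, 319, 320, 321, 12])  # 12 is an isolated noise event
    c2 = largest_cluster_centroid([318, 319, 320, 321])
    b1 = column_to_bearing(c1, 640, 90.0, 45.0)
    b2 = column_to_bearing(c2, 640, 90.0, 135.0)
    print(triangulate((0.0, 0.0), b1, (10.0, 0.0), b2))  # approximately (5, 5)
```

Clustering before triangulating lets isolated noise events be discarded cheaply, which is in the spirit of the low computational overhead the summary emphasizes.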

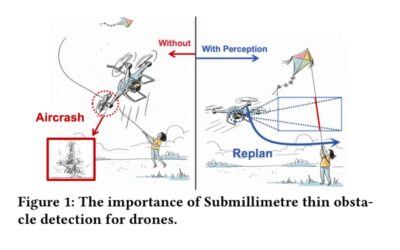

Thin Obstacle Detection for Drone Flight Safety

This paper introduces SkyShield, an event-driven framework for detecting submillimeter-scale thin obstacles, such as steel wires and kite strings, that endanger autonomous drones in complex environments. Exploiting the high temporal resolution of event-based cameras, SkyShield uses a lightweight U-Net and a novel Dice-Contour Regularization Loss to accurately capture thin structures in event streams. Experimental results show a mean F1 score of 0.7088 with a latency of 21.2 ms, making the approach well suited for real-time drone applications on edge and mobile platforms.
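Dice-based losses are a common choice for thin structures because they are far less dominated by the background than per-pixel losses when foreground pixels are rare. The summary does not define the contour-regularization term of SkyShield's loss, so the sketch below shows only a standard soft Dice loss, together with the pixel-wise F1 score; on binary masks, F1 and the Dice coefficient are the same quantity, 2TP / (2TP + FP + FN). Masks are assumed to be flattened into plain Python sequences, and the function names are hypothetical.

```python
def soft_dice_loss(pred, target, eps=1e-6):
    """1 - soft Dice coefficient between predicted foreground probabilities
    and a binary ground-truth mask, both given as flat sequences."""
    inter = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 - (2.0 * inter + eps) / (total + eps)


def f1_score(pred_mask, target_mask):
    """Pixel-wise F1 for binary masks (equal to the hard Dice coefficient).
    Returns 0.0 when there are no true positives; this is a convention, and
    empty-versus-empty masks are sometimes scored as 1.0 instead."""
    tp = sum(1 for p, t in zip(pred_mask, target_mask) if p and t)
    fp = sum(1 for p, t in zip(pred_mask, target_mask) if p and not t)
    fn = sum(1 for p, t in zip(pred_mask, target_mask) if t and not p)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

A full implementation would add the paper's contour term to `soft_dice_loss` and compute it on the U-Net's output tensors rather than on lists.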

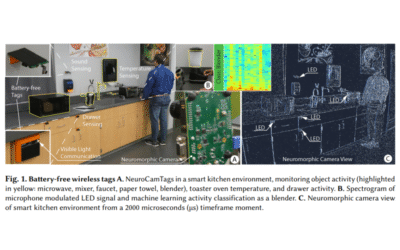

NeuroCamTags: Long-Range, Battery-free, Wireless Sensing with Neuromorphic Cameras

This paper introduces NeuroCamTags, a battery-free platform designed to detect a range of human interactions and activities across entire rooms and floors. The system comprises low-cost tags that harvest ambient light energy and use high-frequency LED modulation for wireless communication; a neuromorphic camera with high temporal resolution captures the resulting visual signals. NeuroCamTags can localize and identify multiple tags, providing battery-free sensing of temperature, contact, button presses, key presses, and sound cues, with accurate detection at distances of up to 200 feet.
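The summary does not specify the modulation scheme, so the sketch below assumes the simplest one for illustration: each tag blinks its LED as a square wave at its own frequency, and the pixel viewing it emits ON events at each rising edge. Under that assumption, a tag can be identified by estimating the blink frequency from event timestamps and matching it against a table of assigned frequencies. The function names, the refractory window, and the tag table are all hypothetical.

```python
from statistics import median


def estimate_frequency_hz(on_timestamps_us, refractory_us=50):
    """Estimate an LED's blink frequency from the ON-event timestamps
    (microseconds) observed at one pixel. Events closer than `refractory_us`
    to the previously kept event are treated as the same rising edge."""
    ts = sorted(on_timestamps_us)
    if not ts:
        raise ValueError("no events")
    edges = [ts[0]]
    for t in ts[1:]:
        if t - edges[-1] > refractory_us:
            edges.append(t)
    if len(edges) < 2:
        raise ValueError("need at least two rising edges")
    periods = [b - a for a, b in zip(edges, edges[1:])]
    # The median period tolerates an occasional missed or spurious edge.
    return 1e6 / median(periods)


def identify_tag(freq_hz, tag_table, tolerance_hz):
    """Return the tag whose assigned frequency is closest to `freq_hz`,
    or None if no assigned frequency lies within `tolerance_hz`."""
    best = min(tag_table, key=lambda tag: abs(tag_table[tag] - freq_hz))
    return best if abs(tag_table[best] - freq_hz) <= tolerance_hz else None
```

For example, with a hypothetical table `{"door": 1000, "button": 2500}`, a pixel whose rising edges arrive every 1000 µs is matched to `"door"`.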

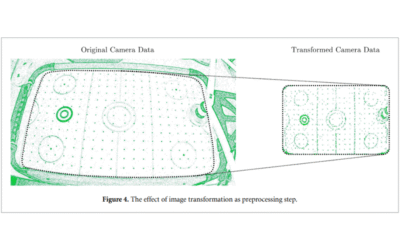

Low-latency neuromorphic air hockey player

This paper focuses on using spiking neural networks (SNNs) to control a robotic manipulator in an air-hockey game. The system processes data from an event-based camera, tracking the puck’s movements and responding to a human player in real time. It demonstrates the potential of SNNs to perform fast, low-power, real-time tasks on massively parallel hardware. The air-hockey platform offers a versatile testbed for evaluating neuromorphic systems and exploring advanced algorithms, including trajectory prediction and adaptive learning, to enhance real-time decision-making and control.
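The paper's controller is a spiking neural network on massively parallel hardware; the sketch below is not that controller. It is a conventional floating-point illustration of the trajectory-prediction idea the summary lists, assuming two puck positions (for example, centroids of event clusters) observed a known time apart, constant velocity, no friction, and ideal mirror bounces off the side walls. The function name and parameters are hypothetical.

```python
def predict_intercept_x(p0, p1, dt, y_line, width):
    """Predict where a puck crosses the line y = y_line.

    p0, p1: (x, y) puck positions observed dt seconds apart. Assumes constant
    velocity and ideal mirror bounces off side walls at x = 0 and x = width.
    Returns None if the puck is not moving toward the line.
    """
    vx = (p1[0] - p0[0]) / dt
    vy = (p1[1] - p0[1]) / dt
    if vy == 0:
        return None
    t = (y_line - p1[1]) / vy
    if t < 0:
        return None  # the line lies behind the puck's direction of travel
    x = p1[0] + vx * t  # crossing point on an "unfolded" table without walls
    # Fold back onto [0, width]: each bounce mirrors the path, so the
    # pattern repeats every 2 * width.
    x %= 2 * width
    if x > width:
        x = 2 * width - x
    return x
```

The unfolding trick handles any number of wall bounces in constant time, which matters when the prediction has to be refreshed at event-camera rates.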

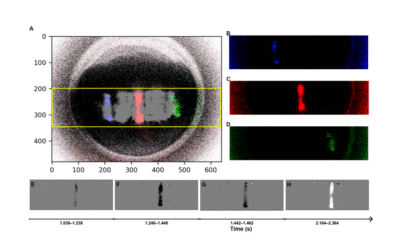

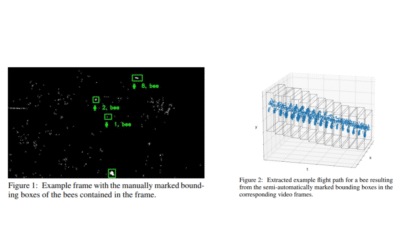

Features for Classifying Insect Trajectories in Event Camera Recordings

This paper focuses on classifying insect trajectories recorded with a stereo event-camera setup. It presents the steps for generating a labeled dataset of trajectory segments, along with methods for propagating labels to unlabeled trajectories. Features are extracted from trajectory point clouds using FoldingNet and PointNet++, and their dimensionality is reduced with t-SNE. The PointNet++ features form clusters corresponding to insect groups and yield a classification accuracy of 90.7% across five groups. Algorithms for estimating insect speed and size are also developed as additional features.
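The summary mentions speed estimation as an additional feature without describing the method. As a minimal sketch, assuming each trajectory is a list of timestamped 3D positions triangulated from the stereo pair, average speed can be taken as path length divided by elapsed time. The function name and units are hypothetical.

```python
import math


def mean_speed(points):
    """Average speed along a 3D trajectory.

    points: iterable of (t, x, y, z) samples, e.g. t in seconds and x, y, z in
    metres. Returns total path length divided by elapsed time.
    """
    pts = sorted(points)  # order samples by timestamp
    if len(pts) < 2:
        raise ValueError("need at least two samples")
    duration = pts[-1][0] - pts[0][0]
    if duration <= 0:
        raise ValueError("samples must span a positive time interval")
    path = sum(math.dist(a[1:], b[1:]) for a, b in zip(pts, pts[1:]))
    return path / duration
```

On noisy positions, summed segment lengths overestimate the true path length, so smoothing the trajectory before calling `mean_speed` is usually worthwhile.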

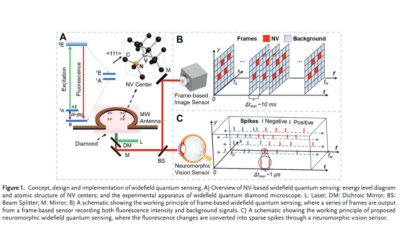

Widefield Diamond Quantum Sensing with Neuromorphic Vision Sensors

This paper uses a neuromorphic vision sensor to encode fluorescence changes in diamonds into spikes for optically detected magnetic resonance (ODMR). This approach reduces data volume and latency and offers a wide dynamic range, high temporal resolution, and an excellent signal-to-background ratio, improving the performance of widefield quantum sensing. Experiments with an off-the-shelf event camera demonstrate significant gains in temporal resolution while maintaining precision comparable to specialized frame-based approaches. The technology also successfully monitors dynamically modulated laser heating of gold nanoparticles, providing new insights for high-precision, low-latency widefield quantum sensing.
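In optically detected magnetic resonance, the diamond's fluorescence typically dips when the applied microwave frequency matches a spin resonance. The sketch below shows, under an idealized event-camera model, how signed events could be turned back into a relative fluorescence curve: each ON or OFF event is assumed to mark a fixed step in log intensity (the contrast threshold), so summing polarities per frequency step tracks log intensity over the sweep. This is not the paper's processing pipeline; the threshold value and function names are hypothetical, and sensor noise and per-pixel threshold mismatch are ignored.

```python
import math


def reconstruct_log_intensity(signed_counts, contrast_threshold):
    """Integrate per-step signed event counts (ON = +1, OFF = -1 each) into
    the change in log intensity relative to the start of the sweep."""
    log_i, acc = [], 0.0
    for n in signed_counts:
        acc += contrast_threshold * n
        log_i.append(acc)
    return log_i


def find_resonance(freqs_mhz, signed_counts, contrast_threshold=0.2):
    """Return the microwave frequency with the lowest reconstructed
    fluorescence, plus the relative intensity curve I / I0."""
    if not freqs_mhz or len(freqs_mhz) != len(signed_counts):
        raise ValueError("need one event count per frequency step")
    log_i = reconstruct_log_intensity(signed_counts, contrast_threshold)
    idx = min(range(len(log_i)), key=log_i.__getitem__)
    return freqs_mhz[idx], [math.exp(v) for v in log_i]
```

Because only pixels whose brightness changes emit events, this style of readout avoids transferring full frames at every sweep step, which is the data-volume advantage the summary describes.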


INVENTORS AROUND THE WORLD

Feb 2025