AN EVENT-BASED PERCEPTION PIPELINE FOR A TABLE TENNIS ROBOT

UNIVERSITY OF TÜBINGEN & ISTITUTO ITALIANO DI TECNOLOGIA

Andreas Ziegler, Thomas Gossard, Arren Glover, Andreas Zell

ABSTRACT

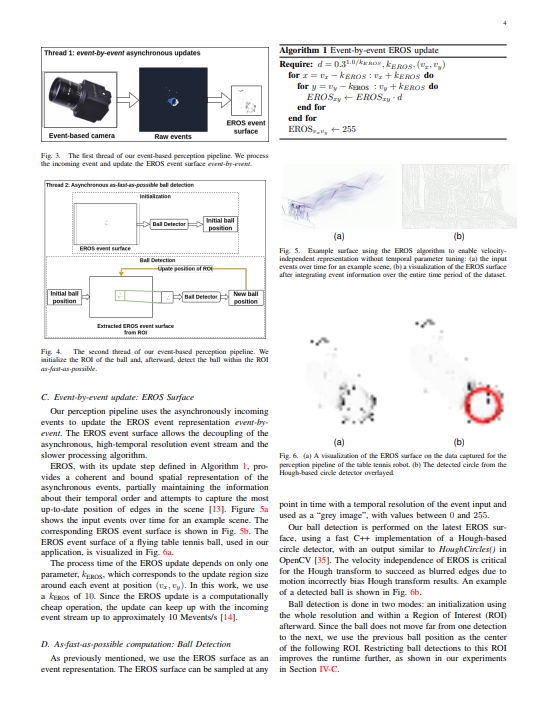

Table tennis robots have gained traction in recent years and have become a popular research challenge for control and perception algorithms. Fast and accurate ball detection is crucial for enabling a robotic arm to return the ball successfully. So far, most table tennis robots use conventional, frame-based cameras in their perception pipeline. However, frame-based cameras suffer from motion blur if the frame rate is not high enough for fast-moving objects. Event-based cameras, on the other hand, do not have this drawback, since their pixels report changes in intensity asynchronously and independently, producing an event stream with a temporal resolution on the order of µs. To the best of our knowledge, we present the first real-time perception pipeline for a table tennis robot that uses only event-based cameras. We show that, compared to a frame-based pipeline, event-based perception pipelines achieve an update rate that is an order of magnitude higher. This benefits the estimation and prediction of the ball’s position, velocity, and spin, resulting in lower mean errors and uncertainties. These improvements are an advantage for robot control, which has to be fast given the short time a table tennis ball is in flight before the robot has to return it.